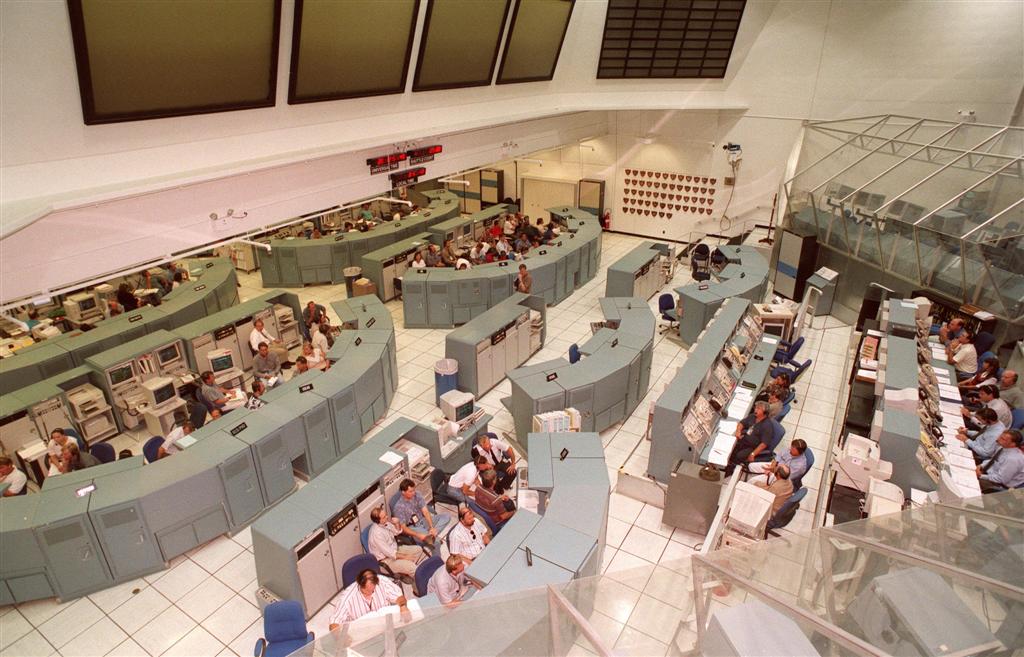

Mission control rooms seem to be obsessed with pixels. We hang huge screens on the walls and surround every operator with as many screens as possible. The newer the control room, the more pixels it's bound to have. It's like we want to completely envelop and immerse our operators in data, replacing the world around them with the world that they're monitoring.

Sorry, this is not the answer.

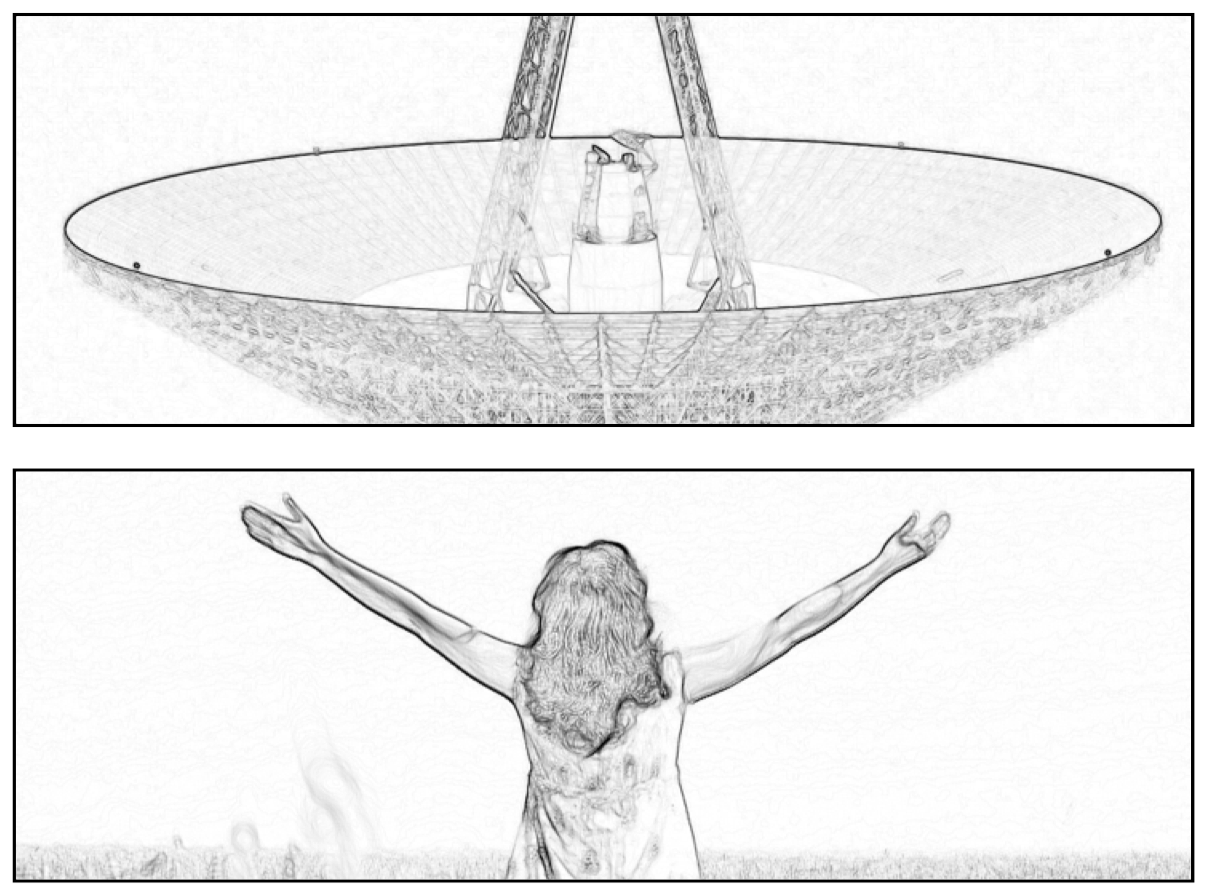

This is how geologists explore the Earth.

While I don't think that more and more monitors are the answer, I do believe that immersion is critical for exploration, because exploration is fundamentally about presence. Presence is the elusive essence of being in a place, and it's a very powerful thing. All of us are endowed with an amazing innate ability to immediately absorb and understand an environment simply by being present in it. Presence enables us to form hypotheses confidently and accurately, to plan actions, and ultimately to make discoveries. That is why almost all human exploration has been accomplished via physical presence in the environment. It's also why any geologist will tell you that to truly understand the story an environment on Earth has to tell, you have to go there.

But this is how geologists explore Mars.

“We don’t need to actually visit the canyon; let’s just look at some pictures of it instead.”

Consider, then, the challenge faced by the scientists who help to operate the Curiosity Mars Rover. Physical presence is, for now, beyond our reach. Instead, scientists have to dig through a torrent of 2D images coming back from our spacecraft and piece together a mental model of the rover's environment. 3D renderings are available, but they're almost always viewed on flat monitors. There's nothing natural about this interface, and in 2013 we performed a carefully controlled scientific study which showed that many scientists struggle to understand the Martian environment this way.

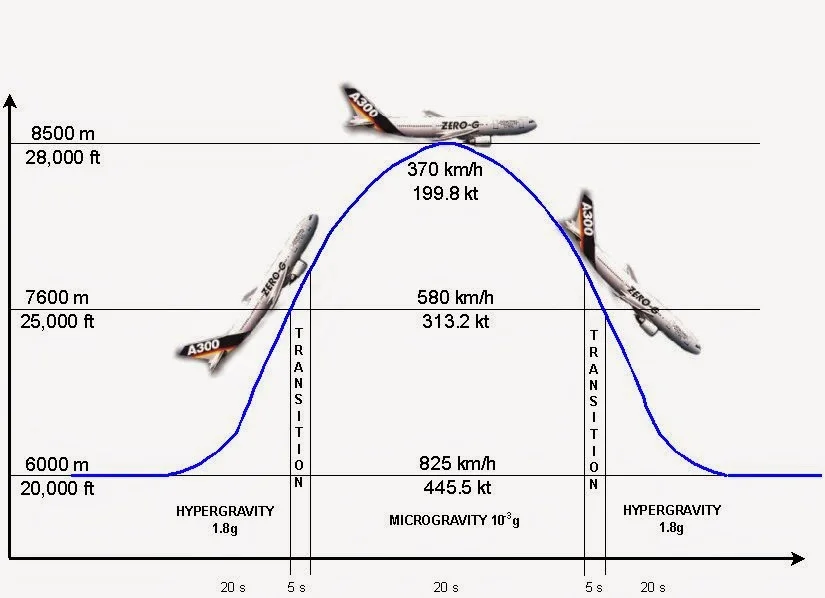

We were confident that virtual reality was part of the answer to this challenge, so we started building systems based on this technology back in 2012. The rebirth of VR hadn't really started yet, so it was tough going at first. The first system we were proud of was built with the Oculus Rift, and the video below summarizes that work.

Three years later, it looks pretty crude. The Oculus Rift had no positional tracking, so we had to hack that on with a Vicon Bonita motion-capture system. We were also experimenting with different techniques for reconstructing and rendering the Martian surface, some successful and others... well, let's just say it's a good thing we didn't stop here!

We couldn't stop here because, even with all of its failings, this system produced dramatic benefits. Our study showed, with high statistical significance, that scientists using this head-mounted system more than doubled their ability to estimate the distances of objects in the environment and more than tripled their ability to estimate the angles of objects in the environment, all without any prior familiarity or training with the technology. We continued this work with other partners: first with Sony and Project Morpheus (later PlayStation VR), and then with Microsoft and the HoloLens, which marked the birth of the OnSight project. Watch the video below to get the big idea, or have a look at our press release.

I believe that OnSight is a major innovation in the way that we explore Mars. It has been successfully deployed to mission operations for the Curiosity rover team and aspects of it are already being integrated into the operations system for the next rover mission. I think it's also a breakthrough technology for sharing the experience of Mars exploration with the world. We used OnSight to build a limited-engagement museum exhibit called Destination: Mars at the Kennedy Space Center Visitor Complex in Cape Canaveral, Florida. See the video below for more about that, or check out its press release.

See you on Mars!